Petrochemical Science - Juniper Publishers

Abstract

Chemists have traditionally relied on experiments to gather and analyze data in order to advance their understanding of chemistry. Since the 1960s, however, computer methods have been developed to assist chemists in this process, leading to the emergence of a new discipline known as chemoinformatics. This field has found applications in areas such as drug discovery, analytical chemistry, and material science. One approach that has gained momentum in recent years is the use of artificial intelligence (AI) in chemistry. AI has been employed for tasks such as predicting molecular properties, designing new molecules, and validating proposed retrosynthesis and reaction conditions. Its use has led to significant progress in drug discovery R&D by reducing costs and development time. Despite these advancements, the concept of AI in chemistry remains relatively unexplored. The use of AI in chemistry has grown significantly in recent years: both journal and patent publications have increased substantially, particularly since 2015, and analytical chemistry and biochemistry have shown the greatest integration of AI, with the highest growth rates. In summary, this review provides a comprehensive overview of how AI has progressed in various fields of chemistry and aims to provide insight into its future directions for scholarly audiences.

Keywords: Artificial Intelligence; Chemistry; Synthesis; Chemical Compounds; Glaser’s Choice Theory; Game Theory

Abbreviations: QSPR: Quantitative Structure-Property Relationships; QSAR: Quantitative Structure-Activity Relationships; ANN: Artificial Neural Networks; DNN: Deep Neural Networks; CASD: Computer-Assisted Synthesis Design; ML: Machine Learning; DL: Deep Learning; MDP: Markov Decision Processes; POSG: Partially Observable Stochastic Game

Introduction

When I was an undergraduate student, I always had problems with the synthesis of chemical compounds! I always wondered why we had to memorize the methods for synthesizing different materials! This problem bothered me so much that, at the time when the new generation of smartphones was beginning to emerge, it led me to the idea of finding a way for a computer to combine the raw materials of chemical compounds and arrive at the desired product. In effect, I was thinking of an algorithm! At that time, I still knew nothing about programming and data science, and machine learning and artificial intelligence were not yet so developed. My idea lay dormant until I encountered artificial intelligence; this is the beginning of a new era of chemistry!

Chemistry in Times of Artificial Intelligence

In 1956, John McCarthy coined the term “artificial intelligence” to refer to the branch of computer science concerned with machine learning processes that can perform tasks typically requiring human intelligence. This involves replicating human intelligence in machines, which has become a crucial aspect of the technology industry due to its ability to collect and analyze data at low cost while ensuring a safe working environment. Artificial intelligence has numerous applications, including natural language processing, reasoning, and strategic decision-making. It can also modify objects based on specific requirements. Artificial intelligence is not limited to engineering but also has many applications in the chemical field. It is useful for designing molecules and predicting their properties such as melting point, solubility, stability, HOMO/LUMO levels, and more. Additionally, artificial intelligence aids in drug discovery by determining molecular structures and their effects on chemicals. This process is time-consuming due to its multi-objective nature; however, artificial intelligence can expedite it by utilizing previously available datasets. In summary, artificial intelligence is a critical component of modern technology that enables machines to replicate human intelligence and perform complex tasks. Its applications extend beyond engineering into fields such as chemistry where it aids in drug discovery and molecular design.

The combination of artificial intelligence and nanotechnology allows for the development of advanced water treatment systems that are highly efficient and effective in removing pollutants from water sources. By using nanotechnology, it is possible to create materials with unique properties that can selectively remove specific pollutants from water. Artificial intelligence can be used to optimize the performance of these materials and ensure that they are used in the most effective way possible. Furthermore, this technology can also be used to monitor water quality in real time, allowing for early detection of potential contamination events. This is particularly important in areas where access to clean water is limited or where there are concerns about the safety of drinking water. Overall, this combination has the potential to revolutionize the way we treat and manage our water resources. It offers a powerful tool for addressing some of the most pressing environmental challenges facing our society today.

Chemistry has experienced a significant increase in data, which coincided with the advent of powerful computer technology. This allowed for the use of computers to perform mathematical operations, such as those required for quantum mechanics, which is the foundation of chemistry. This deductive learning approach involves using a theory to produce data. However, it was also discovered that computers can be used for logical operations and software can be developed to process data and information, leading to inductive learning. For instance, understanding the biological activity of a compound requires knowledge of its structure. By analyzing multiple sets of structures and their corresponding biological activities, one can generalize and gain knowledge about the relationship between structure and biological activity. This field is known as chemoinformatics and emerged from computer methods developed in the 1960s for inductive learning in chemistry.

Initially, methods were developed to represent chemical structures and reactions in computer systems. Subsequently, chemometrics, a field that combined statistical and pattern recognition techniques, was introduced for inductive learning. These methods were applied to analyze data from analytical chemistry. However, chemists also sought to address complex questions such as:

1. Determining the necessary structure for a desired property;

2. Synthesizing the required structure;

3. Predicting the outcome of a reaction.

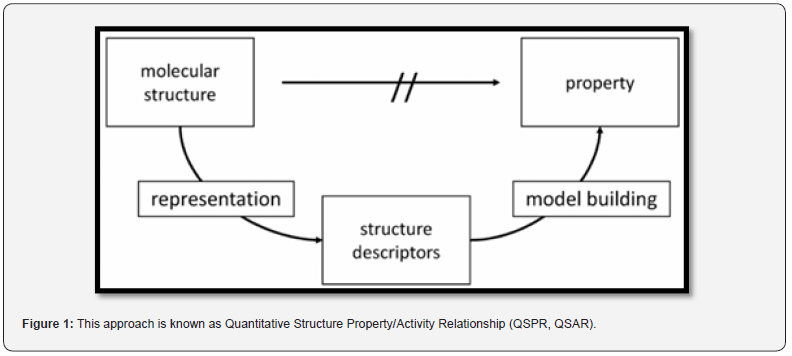

To answer the first question and predict properties quantitatively, researchers established quantitative structure-property/activity relationships (QSPR and QSAR). For the second question, early efforts were made to develop computer-assisted synthesis design tools. Finally, automatic procedures for structure elucidation were needed to answer the third question.

Artificial Intelligence

Early on, it was recognized that developing systems for predicting properties, designing synthesis, and elucidating structures in chemistry would require significant conceptual work and advanced computer technology. Consequently, emerging methods from computer science were applied to chemistry, including those under the umbrella of artificial intelligence. Publications such as “Applications of Artificial Intelligence for Chemical Inference” emerged from the DENDRAL project at Stanford University, which aimed to predict compound structures from mass spectra. Despite collaboration between esteemed chemists and computer scientists and extensive development efforts, the DENDRAL project was eventually discontinued due to various reasons, including the waning reputation of artificial intelligence in the late 1970s.

However, recent years have seen a resurgence of artificial intelligence in general and its application in chemistry in particular. This is due to several factors such as the availability of large amounts of data, increased computing power, and new methods for processing data. These methods are often referred to as “machine learning,” although there is no clear distinction between this term and artificial intelligence.

Databases

Initially, various computer-readable representations of chemical structures were explored for processing and building databases of chemical structures and reactions. Linear notations were preferred due to their conciseness but required a significant set of rules for encoding. However, with the rapid development of computer technology, storage space became more easily and cheaply available, enabling the coding of chemical structures in a manner that opened up many possibilities for structure processing and manipulation. Eventually, the representation of chemical structures by a connection table - lists of atoms and bonds - became the norm. This allows for atomic resolution representation of structure information and access to each bond in a molecule. Despite this shift, one linear code - SMILES notation - remains widely used due to its ease of conversion into a connection table and suitability for sharing chemical structure information online. A molecular structure can be viewed as a mathematical graph. To store and retrieve chemical structures appropriately, various graph theoretical problems had to be solved such as unique and unambiguous numbering of atoms in a molecule, ring perception, tautomer perception, etc. The use of connection tables enabled the development of methods for full structure, substructure, and similarity searching.
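As a minimal sketch of the connection-table idea described above (not the format of any particular database), the snippet below stores a molecule as a list of atoms and a list of bonds, derives neighbor lists from it, and counts independent rings using the graph-theoretical cyclomatic number. The function names `neighbors`, `component_count`, and `ring_count`, as well as the hydrogen-suppressed tables for ethanol (SMILES `CCO`) and cyclohexane (SMILES `C1CCCCC1`), are illustrative assumptions; real ring perception and unique atom numbering are considerably more involved.

```python
# Hydrogen-suppressed connection tables: a list of atoms plus a list of bonds.
# Each bond is (atom index, atom index, bond order). Hypothetical layout for illustration.
ETHANOL = {"atoms": ["C", "C", "O"], "bonds": [(0, 1, 1), (1, 2, 1)]}
CYCLOHEXANE = {
    "atoms": ["C"] * 6,
    "bonds": [(i, (i + 1) % 6, 1) for i in range(6)],
}


def neighbors(table):
    """Build a neighbor list (molecular graph) from the bond list."""
    adj = {i: [] for i in range(len(table["atoms"]))}
    for a, b, _order in table["bonds"]:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def component_count(table):
    """Count connected fragments with a depth-first traversal."""
    adj = neighbors(table)
    seen = set()
    count = 0
    for start in adj:
        if start in seen:
            continue
        count += 1
        stack = [start]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            stack.extend(adj[node])
    return count


def ring_count(table):
    """Number of independent rings: bonds - atoms + connected components."""
    return len(table["bonds"]) - len(table["atoms"]) + component_count(table)


print(neighbors(ETHANOL)[1], ring_count(ETHANOL), ring_count(CYCLOHEXANE))
```

Because every atom and bond is individually addressable in this representation, operations such as substructure matching can be phrased as graph problems over the neighbor lists.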

Prediction of Properties

Predicting the properties of a compound from its structure is commonly approached through quantitative structure-activity relationship (QSAR) modeling. QSAR models have been successfully used to predict a wide range of properties, including toxicity, bioactivity, and physical properties such as solubility and melting point. Another approach to predicting properties is through the use of machine learning algorithms. These algorithms can analyze large amounts of data and identify patterns that can be used to make predictions. Machine learning has been applied to a variety of chemical problems, including drug discovery and materials design. Overall, the ability to predict chemical properties is essential for drug discovery, materials science, and many other fields. The development of methods for processing chemical structures and building databases has enabled the creation of powerful tools for predicting properties and accelerating scientific discovery (Figure 1).

Relating descriptors of molecular structure to a property or activity is known as Quantitative Structure-Property/Activity Relationship (QSPR, QSAR) modeling. Many different methods for the calculation of structure descriptors have been developed. They represent molecules with increasing detail: 1D, 2D, and 3D descriptors, representations of molecular surface properties, and even descriptors that take account of molecular flexibility. A variety of mathematical methods for modeling the relationship between the molecular descriptors and the property of a compound are also available. These are the inductive learning methods and are sometimes subsumed under names like data analysis methods, machine learning, or data mining. They comprise methods such as simple multi-linear regression analysis, a variety of pattern recognition methods, random forests, support vector machines, and artificial neural networks. Artificial neural networks (ANN) try to model the information processing in the human brain and offer much potential for studying chemical data.
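To make the simplest of these methods concrete, the sketch below fits a one-descriptor linear regression by ordinary least squares and uses it to predict the property of an unseen compound. It is only an illustration under stated assumptions: the function names `fit_line` and `predict` are hypothetical, and the descriptor and property values are synthetic numbers generated from the rule property = 2 × descriptor + 1, not measured chemical data.

```python
def fit_line(descriptors, properties):
    """Ordinary least squares for one descriptor: property = slope * x + intercept."""
    n = len(descriptors)
    mean_x = sum(descriptors) / n
    mean_y = sum(properties) / n
    sxx = sum((x - mean_x) ** 2 for x in descriptors)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(descriptors, properties))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, descriptor):
    """Apply the fitted relationship to a new descriptor value."""
    slope, intercept = model
    return slope * descriptor + intercept


# Synthetic training set (assumption): property = 2.0 * descriptor + 1.0
DESCRIPTORS = [1.0, 2.0, 3.0, 4.0, 5.0]
PROPERTIES = [3.0, 5.0, 7.0, 9.0, 11.0]

MODEL = fit_line(DESCRIPTORS, PROPERTIES)
print(MODEL, predict(MODEL, 6.0))
```

With several descriptors the same idea becomes multi-linear regression; the other methods listed above replace the straight line with more flexible functions.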

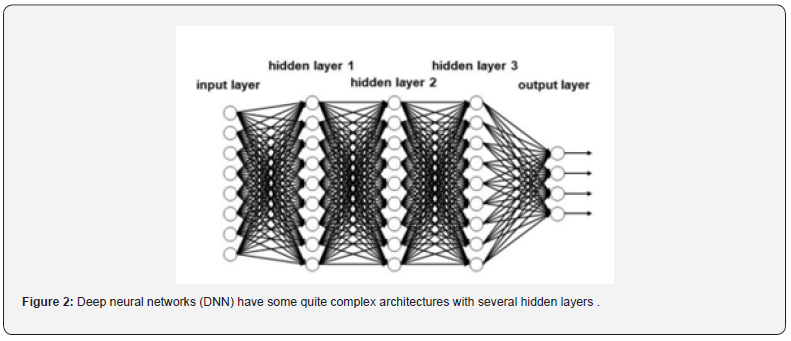

To establish a relationship between the molecular descriptors and the property, values known as weights have to be attributed to the connections between the neurons. This is most often achieved with the backpropagation algorithm, by repeatedly presenting pairs of molecular descriptors and their properties; these iterations quite often run into the tens of thousands or more. An ANN has the advantage that the mathematical relationship between the input units and the output need not be specified or known; it is implicitly laid down in the weights and can also comprise non-linear relationships. It was tempting to envisage that with artificial neural networks the field of artificial intelligence was awakening again. In fact, in recent years terms like deep learning or deep neural networks have appeared in many fields, including chemistry, and have brought a renaissance to the domain of artificial intelligence. In the illustration of a deep neural network, observe that the network has been rotated by 90 degrees compared to the one in Figure 2.
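The weight-fitting procedure described above can be sketched with a very small network. The example below is a hedged illustration, not the setup of any study cited here: it assumes a network with two inputs, two hidden sigmoid neurons, and one sigmoid output, a mean squared error loss, and full-batch gradient descent. The toy data set (an XOR-like, non-linear relationship between two descriptors and a property), the starting weights, and the names `forward`, `loss`, `gradient`, and `train` are all hypothetical.

```python
import math

# Toy descriptor pairs and target property (assumption: XOR-like, non-linear).
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

# Flat parameter list: p[0:4] hidden weights, p[4:6] hidden biases,
# p[6:8] output weights, p[8] output bias. Arbitrary starting values.
INIT = [0.5, -0.4, 0.3, 0.8, 0.1, -0.2, 0.7, -0.6, 0.05]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(p, x):
    """Return hidden activations and network output for one input pair."""
    h = [sigmoid(p[2 * j] * x[0] + p[2 * j + 1] * x[1] + p[4 + j]) for j in range(2)]
    y = sigmoid(p[6] * h[0] + p[7] * h[1] + p[8])
    return h, y


def loss(p, data):
    """Mean squared error (with the conventional factor 1/2)."""
    return sum((forward(p, x)[1] - t) ** 2 for x, t in data) / (2 * len(data))


def gradient(p, data):
    """Backpropagation: push the output error back through the weights."""
    g = [0.0] * 9
    n = len(data)
    for x, t in data:
        h, y = forward(p, x)
        delta_out = (y - t) * y * (1.0 - y)  # error signal at the output neuron
        for j in range(2):
            g[6 + j] += delta_out * h[j] / n
            delta_h = delta_out * p[6 + j] * h[j] * (1.0 - h[j])  # error at hidden neuron j
            g[2 * j] += delta_h * x[0] / n
            g[2 * j + 1] += delta_h * x[1] / n
            g[4 + j] += delta_h / n
        g[8] += delta_out / n
    return g


def train(p, data, lr=0.5, epochs=2000):
    """Repeatedly present the data and move every weight against its gradient."""
    p = list(p)
    for _ in range(epochs):
        g = gradient(p, data)
        p = [pi - lr * gi for pi, gi in zip(p, g)]
    return p


trained = train(INIT, DATA)
print(loss(INIT, DATA), loss(trained, DATA))
```

Nothing in the code states the functional form linking inputs to output; whatever relationship is learned is stored only in the nine numbers of the parameter list.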

Deep neural networks (DNN) have quite complex architectures with several hidden layers. As a consequence, many weights for the connections have to be determined. To avoid overfitting, novel approaches and algorithms had to be developed to obtain networks that have true predictivity. Deep neural networks need a large amount of training data in order to yield truly predictive models. Most applications of DNN have been made in drug design and in analyzing reaction data (vide infra).
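A quick way to see why deeper networks demand more data is to count their adjustable parameters. The sketch below assumes fully connected layers with one bias per neuron; the function name `count_parameters` and the layer sizes are hypothetical choices for illustration.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer sizes."""
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights + biases


# 100 descriptors in, one property out (illustrative sizes only).
shallow = count_parameters([100, 50, 1])
deep = count_parameters([100, 50, 50, 50, 1])
print(shallow, deep)
```

Under these assumptions, adding two hidden layers of 50 neurons roughly doubles the number of values that must be determined from the training data.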

Reaction Prediction and Computer-Assisted Synthesis Design (CASD)

Recent reaction prediction and CASD systems use machine learning algorithms and deep neural networks to predict reactions and suggest synthesis pathways. The accuracy of these systems has improved significantly, with some achieving success rates of over 90% in predicting reactions and suggesting synthetic routes. Such systems have the potential to revolutionize the field of organic synthesis by enabling chemists to design new molecules and synthesize them more efficiently. However, there are still challenges to overcome, such as the need for more comprehensive reaction databases and the development of more accurate machine learning models. Nonetheless, the progress made in recent years suggests that computer-assisted synthesis design will become an increasingly important tool for organic chemists in the future.

The development of computer-assisted synthesis design (CASD) systems has been hindered until recently due to limited reaction databases and search procedures. However, with the availability of large reaction databases and novel data processing algorithms, CASD systems have been developed using machine learning algorithms and deep neural networks to predict reactions and suggest synthesis pathways. These systems have achieved high success rates in predicting reactions and suggesting synthetic routes, with the potential to revolutionize organic synthesis. Nonetheless, challenges remain, such as the need for more comprehensive reaction databases and more accurate machine learning models. Methods for calculating values for concepts like synthetic accessibility or current complexity have also been developed to assist chemists in selecting molecules for further investigation. The combination of large reaction databases with novel data processing algorithms has matured CASD systems, making them a valuable tool for organic chemists in planning laboratory work.

Computer-assisted synthesis design (CASD) systems have thus recently emerged as a valuable tool for organic chemists, facilitated by the availability of large reaction databases and novel data processing algorithms. In addition, the use of bioinformatics and chemoinformatics methods in combination has led to interesting insights in the study of biochemical pathways, including the identification of major pathways for periodontal disease and the derivation of flavor-forming pathways in cheese by lactic acid bacteria. Finally, novel artificial intelligence methods such as deep learning architectures are being applied to metabolic pathway prediction, offering exciting possibilities for pathway redesign.

Cosmetics Products Discovery

In recent years, chemoinformatics and bioinformatics methods that have established their value in drug discovery, such as molecular modeling, structure-based design, molecular dynamics simulations, and gene expression analysis, have also been utilized in the development of new cosmetics products. In this way, novel skin moisturizers and anti-aging compounds have been developed. With the Cosmetics Directive, the European Union has passed legislation under which chemicals that have been tested on animals may no longer be added to cosmetics products. This has given a large push to the establishment of computer models for predicting the toxicity of chemicals that might be included in cosmetics products.

Material Science

The prediction of the properties of materials is probably the most active area of chemoinformatics. The properties that are investigated range from those of nanomaterials, materials for regenerative medicine, solar cells, homogeneous or heterogeneous catalysts, electrocatalysts, and ceramics to phase diagrams and the properties of supercritical solvents. Reviews of this work have appeared. In most cases, the chemical structure of the material investigated is not known, and therefore other types of descriptors have to be chosen to represent a material for a QSAR study. Materials could be represented by physical properties such as refractive index or melting point, by spectra, or by the components or conditions used in the production of the material. The use of chemoinformatics methods in material science is particularly opportune, as in most cases the properties of interest depend on many parameters and cannot be directly calculated. A QSAR model would allow the design of new materials with the desired property.

Process Control

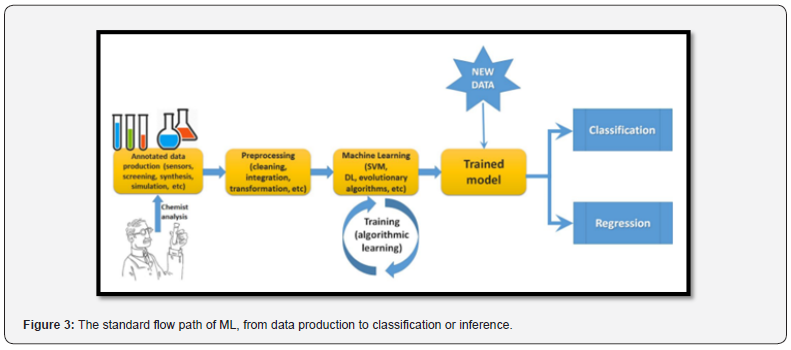

Fault detection in chemical processes and process control benefited quite early on from the application of artificial neural networks. An overall course on the application of artificial intelligence in process control has been developed by six European universities. Chemical processes rapidly generate a host of data on the flow of chemicals, concentration, temperature, pressure, product distribution, etc. These data have to be used to recognize potential faults in the process and rapidly bring the process back to its optimum. The relationships between the various data produced by sensors and the amount of desired product cannot be explicitly given, making this an ideal case for the application of powerful data modeling techniques. Quite a few excellent results have been obtained for processes such as petrochemical or pharmaceutical processes, water treatment, agriculture, iron manufacture, exhaust gas denitration, and distillation column operation (Figure 3).
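The basic task of flagging abnormal sensor readings can be illustrated without a neural network. The sketch below deliberately swaps in a much simpler technique, a statistical threshold on deviation from a fault-free baseline, purely to show the shape of the problem; it is not the ANN approach referred to above. The function name `detect_faults`, the threshold `k`, and the temperature readings are hypothetical values chosen for illustration.

```python
from statistics import mean, stdev


def detect_faults(baseline, readings, k=3.0):
    """Return indices of readings more than k standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [i for i, value in enumerate(readings) if abs(value - mu) > k * sigma]


# Hypothetical reactor temperatures recorded during normal operation.
BASELINE_TEMPS = [350.0, 351.0, 349.0, 350.5, 349.5, 350.0]
# New readings to be screened; the third value is an injected disturbance.
NEW_TEMPS = [350.2, 349.8, 362.0, 350.1]

print(detect_faults(BASELINE_TEMPS, NEW_TEMPS))
```

A single-sensor threshold like this cannot capture the interplay of flow, concentration, temperature, and pressure, which is where data modeling techniques such as neural networks become attractive.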

Identification of Compounds with Genetic Algorithms

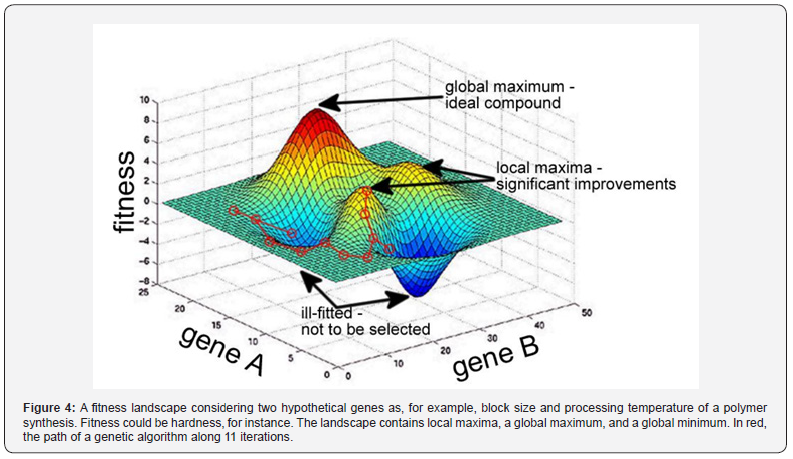

Bio-inspired computing involves the development of procedures that mimic mechanisms observed in natural environments. For example, genetic algorithms are inspired by Charles Darwin’s concept of evolution and use the principle of “survival of the fittest” to facilitate optimization. In this process, active compounds interbreed and mutate to produce new compounds with new properties. Compounds that show useful properties are selected for further synthesis, while those that do not are discarded. After several generations, new functional compounds appear with inherited and acquired properties. This process requires accurate composition modeling, appropriate mutation methods, and robust fitness assessments that can be obtained computationally, reducing the need for expensive experiments. In genetic algorithms, each compositional or structural feature of a compound is considered a gene. Chemical genes include factors such as individual components, polymer block size, monomer compositions, and processing temperature. The genome contains all the genes of a combination, and the resulting properties are known as the phenotype. Genetic algorithms scan the search space of gene domains to identify the most suitable phenotypes based on a fitness function. The relationship between genome and phenotype creates a fitness landscape, as shown in the accompanying figure for two hypothetical genes related to polymer synthesis processes aimed at high hardening rates. The fitness landscape is implicit in the problem model defined by gene domains and fitness function.

The genetic algorithm moves along the fitness landscape, generating new combinations while avoiding combinations that do not improve fitness. This mechanism increases the probability of achieving the desired properties. In summary, each trait of a combination is treated as a gene, the genome comprises all the genes of a combination, and the genome gives rise to a phenotype; the algorithm scans the search space of gene domains to identify the most suitable phenotypes based on a fitness function. The effectiveness of genetic algorithms has been shown in various fields, including catalyst optimization and material science (Figure 4).
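The loop of selection, interbreeding, and mutation can be sketched in a few lines. The example below is a minimal illustration under stated assumptions rather than a method from the literature reviewed here: the two genes (`temperature` and `monomer_fraction`), their domains, the single-peaked fitness function, and all function names are hypothetical, and in practice the fitness would come from a computed or measured property.

```python
import random

# Gene domains (assumption): allowed range for each gene.
GENE_DOMAINS = {"temperature": (50.0, 150.0), "monomer_fraction": (0.0, 1.0)}


def fitness(genome):
    """Hypothetical fitness landscape with one peak at 100 degrees and fraction 0.6."""
    t = (genome["temperature"] - 100.0) / 50.0
    f = genome["monomer_fraction"] - 0.6
    return -(t * t + f * f)


def random_genome(rng):
    return {gene: rng.uniform(lo, hi) for gene, (lo, hi) in GENE_DOMAINS.items()}


def crossover(parent_a, parent_b, rng):
    """Each gene is inherited from one of the two parents at random."""
    return {g: (parent_a[g] if rng.random() < 0.5 else parent_b[g]) for g in GENE_DOMAINS}


def mutate(genome, rng, rate=0.2):
    """Occasionally perturb a gene, keeping it inside its domain."""
    child = dict(genome)
    for gene, (lo, hi) in GENE_DOMAINS.items():
        if rng.random() < rate:
            step = rng.gauss(0.0, 0.1 * (hi - lo))
            child[gene] = min(hi, max(lo, child[gene] + step))
    return child


def evolve(generations=30, pop_size=20, seed=0):
    """Keep the fitter half of each generation and refill it with mutated offspring."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        survivors = population[: pop_size // 2]
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(pop_size - len(survivors))
        ]
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population[0], history


best, history = evolve()
print(best, history[0], history[-1])
```

Because the fittest genomes always survive into the next generation in this sketch, the best fitness recorded in `history` can never decrease from one generation to the next.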

Synthesis Prediction Using ML

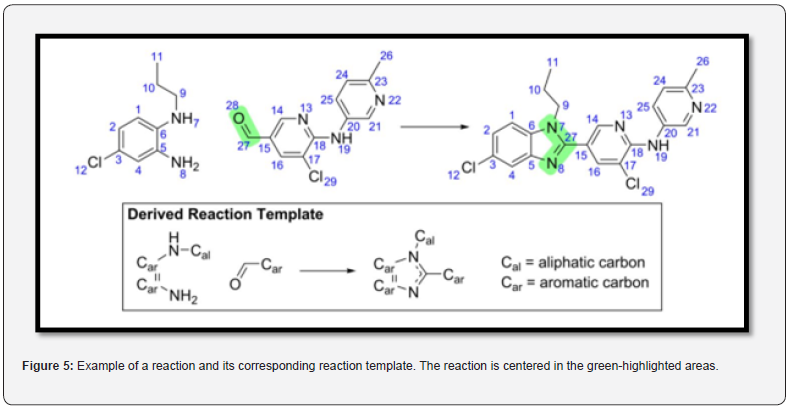

The challenging task of synthesizing new compounds, particularly in organic chemistry, has led to the search for machine-based methods that can predict the molecules produced from a given set of reactants and reagents. Corey and Wipke’s 1969 approach used templates produced by expert chemists to define atom connectivity rearrangement under specific conditions. However, limited template sets prevented their method from encompassing a wide range of conditions. The use of templates or rules to transfer knowledge from human experts to computers has gained renewed interest due to the potential for automatic rule generation using big datasets, as seen in medicine and materials science. Machine learning methods, such as neural networks, have been successful in predicting retrosynthesis outcomes using large datasets. Deep learning appears to be the most promising approach for exploring large search spaces. Recent experiments using link prediction ML algorithms on a knowledge graph built from millions of binary reactions have shown high accuracy in predicting products and detecting unlikely reactions (Figure 5).

Figure caption: The corresponding template includes the reaction center and nearby functional groups.

The search for machine-based methods to predict the molecules produced from a given set of reactants and reagents in organic chemistry has led to the use of templates and rules to transfer knowledge from human experts to computers. However, limited template sets have prevented these methods from encompassing a wide range of conditions. Recent experiments using machine learning (ML) methods, such as neural networks and deep learning (DL), have shown high accuracy in predicting products and detecting unlikely reactions. DL appears to be the most promising approach for exploring large search spaces and has been used to predict outcomes of chemical reactions by assimilating latent patterns for generalizing out of a pool of examples. The SMILES chemical language has been employed in DL models to represent molecular structures as graphs and strings amenable to computational processing. DNNs have been used to predict reactions with superior performance compared to previous rule-based expert systems, achieving an accuracy of 97% on a validation set of 1 million reactions. Additionally, DNNs have been used to map discrete molecules into a continuous multidimensional space, where it is possible to predict properties of existing vectors and predict new vectors with certain properties. Optimization techniques can then be employed to search for the best candidate molecules.

Learning from data has always been a cornerstone of chemical research. Over the last sixty years, computer methods have been introduced in chemistry to convert data into information and then derive knowledge from this information. This has led to the establishment of the field of chemoinformatics, which has undergone impressive developments and found applications in most areas of chemistry, from drug design to material science. Artificial intelligence techniques have recently seen a rebirth in chemistry and will have to be optimized to also allow us to understand the basic foundations of chemical data. This review examines the growth and distribution of AI-related chemistry publications over the past two decades using the CAS Content Collection.

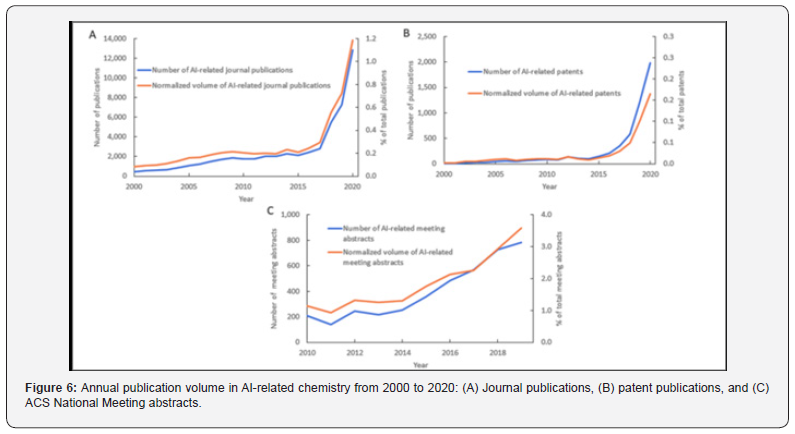

The volume of journal and patent publications has increased significantly, particularly since 2015. Analytical chemistry and biochemistry have integrated AI to the greatest extent and with the highest growth rates. Interdisciplinary research trends were also identified, along with emerging associations of AI with certain chemistry research topics. Notable publications in various chemistry disciplines were evaluated to highlight emerging use cases. The occurrence of different classes of substances and their roles in AI-related chemistry research were quantified, detailing the popularity of AI adoption in life sciences and analytical chemistry. AI can be applied to various tasks in chemistry, where complex relationships are often present in data sets. AI implementations have dramatically reduced design and experimental effort by enabling laboratory automation, predicting bioactivities of new drugs, optimizing reaction conditions, and suggesting synthetic routes to complex target molecules. This review contextualizes the current AI landscape in chemistry, providing an understanding of its future directions (Figure 6).

This review analyzes the growth and distribution of AI-related chemistry publications over the past two decades using the CAS Content Collection. The volume of journal publications and patents has increased significantly, since 2015 in particular, with analytical chemistry and biochemistry integrating artificial intelligence to the greatest extent and with the highest growth rates. Interdisciplinary research trends were identified, along with emerging connections of artificial intelligence to specific chemistry research topics. Significant publications in various chemistry disciplines were evaluated to highlight emerging applications. The popularity of artificial intelligence adoption in life sciences and analytical chemistry was determined by examining the occurrence of different classes of materials and their role in artificial intelligence-related chemistry research. Artificial intelligence has reduced design and experimental effort by enabling laboratory automation, predicting the bioactivities of new drugs, optimizing reaction conditions, and suggesting synthetic routes to complex target molecules. This review examines the current landscape of artificial intelligence in chemistry and provides insight into its future directions. The CAS Content Collection was searched to identify publications related to artificial intelligence from 2000 to 2020 based on various artificial intelligence terms in the title, keywords, abstract text, and concepts selected by CAS experts. Approximately 70,000 journal publications and 17,500 patents from the CAS Content Collection were identified as related to AI. The number of journal publications and patents increased over time and showed similar fast-growing trends after 2015. The proportion of research related to artificial intelligence is increasing, indicating an absolute increase in research efforts for artificial intelligence in chemistry.

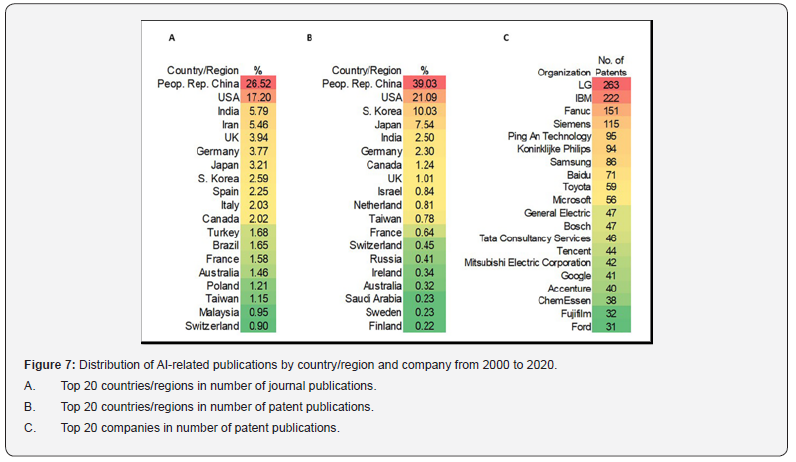

This review analyzes the growth and distribution of AI-related chemistry publications over the past two decades using the CAS Content Collection. The volume of journal and patent publications has significantly increased, especially since 2015, with analytical chemistry and biochemistry integrating AI to the greatest extent and with the highest growth rates. Interdisciplinary research trends were identified, along with emerging associations of AI with specific chemistry research topics. Notable publications in various chemistry disciplines were evaluated to highlight emerging use cases. The popularity of AI adoption in life sciences and analytical chemistry was quantified by examining the occurrence of different classes of substances and their roles in AI-related chemistry research. AI has reduced design and experimental effort by enabling laboratory automation, predicting bioactivities of new drugs, optimizing reaction conditions, and suggesting synthetic routes to complex target molecules. This review contextualizes the current AI landscape in chemistry and provides an understanding of its future directions. The CAS Content Collection was searched to identify AI-related publications from 2000 to 2020 based on various AI terms in their title, keywords, abstract text, and CAS expert-curated concepts. Roughly 70,000 journal publications and 17,500 patents from the CAS Content Collection were identified as related to AI. The numbers of both journal and patent publications increased with time, showing similar rapidly growing trends after 2015. The proportion of AI-related research is increasing, suggesting an absolute increase in research effort toward AI in chemistry (Figure 7).

The countries/regions and organizations of origin of AI-related chemistry documents were then extracted to determine their distribution. China and the United States had the highest number of publications for both journal articles and patents. Medical diagnostics developers and technology companies make up a large portion of the commercial patent assignees for chemical AI research. Publication trends in specific research areas were also analyzed, and analytical chemistry had the highest normalized volume in recent years. Energy technology and environmental chemistry, as well as industrial chemistry and chemical engineering, are also growing fields in terms of research related to artificial intelligence. Biochemistry is heavily featured in AI-related patent publications, likely due to its use in drug research and development.


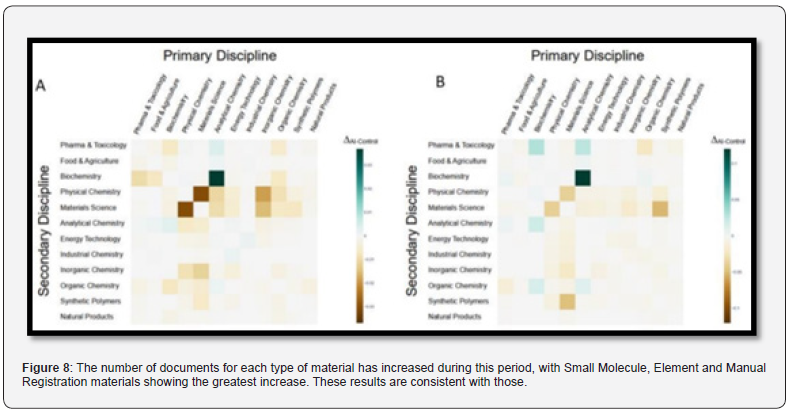

This review contextualizes the current AI landscape in chemistry and provides an understanding of its future directions. The countries/regions and organizations of origin for AI-related chemistry documents were then extracted to determine their distributions. China and the United States contributed the largest numbers of publications for both journal articles and patents. Medical diagnostic developers and technology companies make up a large portion of the commercial patent assignees for AI chemical research. Trends of publications in specific research areas were also analyzed, with Analytical Chemistry having the highest normalized volume in recent years. Energy Technology and Environmental Chemistry and Industrial Chemistry and Chemical Engineering are also growing fields in terms of AI-related research. Biochemistry is highly represented in AI-related patent publications, possibly due to its use in drug research and development. Interdisciplinary relationships do appear in AI-related chemistry research, demonstrating how AI can be applied in research areas where the relationships between available data in separate domains are not obvious to researchers (Figure 8).

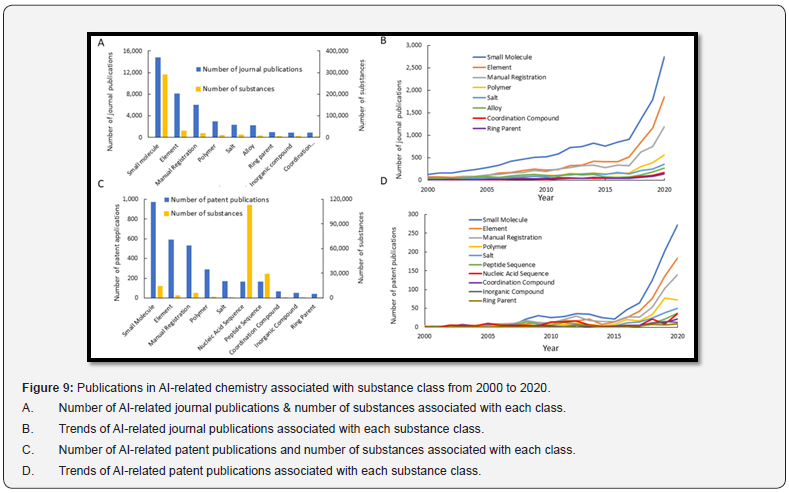

Difference in proportion of total AI-related publications and control group (non-AI related) by interdisciplinary pair: (A) journal publications and (B) patent publications.

The distribution of AI-related research in chemistry can be analyzed by examining the number of publications involving different classes of substances. Overcoming challenges in substance representation and data availability is critical to implementing artificial intelligence in chemistry. Therefore, identifying the most common types of substances studied in the literature can indicate areas where researchers have successfully addressed these challenges. CAS classifies substances into several classes, and Figure 9 shows the number of AI-related journal publications for common substance classes, including Alloy, Coordination Compound, Element, Manual Registration, Ring Parent, Small Molecule, Polymer, Salt, and Inorganic Compound. Publications containing Small Molecule substances are the most numerous, followed by publications containing Element and Manual Registration substances. This trend is probably due to the relative simplicity and ease of modeling of these substances compared to Coordination Compound and Polymer. The high number of documents containing Manual Registration substances corresponds to the high volume of publications in biochemistry. Figure 9 also shows the total number of substances in AI-related journal publications for each substance class. The trend in substance counts is similar to that in document counts, although skewed by the higher number of small molecule substances in each document (Figure 9).

The popularity of AI applications in chemistry has grown rapidly in recent years, with some fields further along in adoption than others. The success of AI implementation is linked to the availability and quality of data, as well as opportunities for gaining insights and automating repetitive tasks. Analytical chemistry and biochemistry have seen significant AI deployment due to the availability of large training sets and data for macromolecules. The popularity of AI applications in drug discovery is reflected in the large number of publications involving small molecules. The increased availability of software and hardware tools, research area-specific data sets, and researcher expertise have contributed to the growth of AI in chemistry. While AI has been successfully adapted to many areas of chemical research, there are still areas where its impact has yet to be felt. With continued improvements in AI and interdisciplinary research, these areas may see increased adoption in the future.

Glasser’s Choice Theory

Choice theory posits that the behaviors we choose are central to our existence. Our behavior (choices) is driven by five genetically driven needs in hierarchical order: survival, love, power, freedom, and fun. The most basic human needs are survival (physical component) and love (mental component). Without physical care (nurturing) and emotional care (love), an infant will not survive to attain power, freedom, and fun.

“No matter how well-nourished and intellectually stimulated a child is, going without human touch can stunt his mental, emotional, and even physical growth”.

Choice theory suggests the existence of a “quality world.” The idea of a “quality world” in choice theory has been compared to Jungian archetypes, but it is unclear whether Glasser acknowledged this connection. Some argue that Glasser’s “quality world” and what Jung would call healthy archetypes share similarities. The images in our “quality world” are the role models that make up an individual’s “perfect” world of parents, relations, possessions, beliefs, etc. It is taken for granted that each person’s “quality world” is somewhat unique, even within the same family of origin.

Starting from birth and continuing throughout our lives, each person places significant role models, significant possessions, and significant systems of belief (religion, cultural values, icons, etc.) into a mostly unconscious framework Glasser called our “quality world”. The issue of negative role models and stereotypes is not extensively discussed in choice theory. Glasser also posits a “comparing place,” where we compare and contrast our perceptions of people, places, and things immediately in front of us against our ideal images (archetypes) of these in our quality world framework. Our subconscious pushes us towards calibrating, as best we can, our real-world experience with our quality world (archetypes). Behavior (“total behavior” in Glasser’s terms) is made up of four components: acting, thinking, feeling, and physiology. Glasser suggests we have considerable control or choice over the first two of these, yet little ability to directly choose the latter two, as they are more deeply subconscious and unconscious. These four components remain closely intertwined, and the choices we make in our thinking and acting will greatly affect our feelings and physiology.

Glasser frequently emphasizes that failed or strained relationships with significant individuals, such as spouses, parents, children, friends, and colleagues, can contribute to personal unhappiness. The symptoms of unhappiness are widely variable and are often seen as mental illnesses. Glasser believed that “pleasure” and “happiness” are related but far from synonymous. Sex, for example, is a “pleasure” but may well be divorced from a “satisfactory relationship,” which is a precondition for lasting “happiness” in life. Hence the intense focus on the improvement of relationships in counseling with choice theory, the “new reality therapy”. Individuals who are familiar with both reality therapy and choice theory may have a preference for the latter, which is considered a more modern approach. According to choice theory, mental illness can be linked to personal unhappiness. Glasser champions the view that we are able to learn and choose alternate behaviors that result in greater personal satisfaction. Reality therapy is a choice theory-based counseling process focused on helping clients learn to make those self-optimizing choices [1].

The Ten Axioms of Choice

a. The only person whose behavior we can control is ourselves.

b. All we can give another person is information.

c. All long-lasting psychological problems are relationship problems.

d. The problem relationship is always part of our present life.

e. What happened in the past has everything to do with who we are today, but we can only satisfy our basic needs right now and plan to continue satisfying them in the future.

f. We can only satisfy our needs by satisfying the pictures in our quality world.

g. All we do is behave.

h. All behavior is total behavior and is made up of four components: acting, thinking, feeling, and physiology.

i. All of our total behavior is chosen, but we only have direct control over the acting and thinking components. We can only control our feelings and physiology indirectly through how we choose to act and think.

j. All total behavior is designated by verbs and named by the part that is the most recognizable.

In Classroom Management

William Glasser’s choice theory begins: Behavior is not separate from choice; we all choose how to behave at any time. Second, we cannot control anyone’s behavior but our own. Glasser emphasized the importance of classroom meetings as a means to improve communication and solve classroom problems. Glasser suggested that teachers should assist students in envisioning a fulfilling school experience and planning the choices that would enable them to achieve it. For example, Johnny Waits is an 18-year-old high school senior and plans on attending college to become a computer programmer. Glasser suggests that Johnny could be learning as much as he can about computers instead of reading Plato. Glasser proposed a curriculum approach that emphasizes practical, real-world topics chosen by students based on their interests and inclinations. This approach is referred to as the quality curriculum. The quality curriculum places particular emphasis on topics that have practical career applications. According to Glasser’s approach, teachers facilitate discussions with students to identify topics they are interested in exploring further when introducing new material. In line with Glasser’s approach, students are expected to articulate the practical value of the material they choose to explore[2].

Education

Glasser did not endorse Summerhill, and the quality schools he oversaw typically had conventional curriculum topics. The main innovation of these schools was a deeper, more humanistic approach to the group process between teachers, students, and learning.

Critiques

In a book review, [3] Christopher White writes that Glasser believes everything in the DSM-IV-TR is a result of an individual’s brain creatively expressing its unhappiness. White also notes that Glasser criticizes the psychiatric profession and questions the effectiveness of medications in treating mental illness. White points out that the book does not provide a set of randomized clinical trials demonstrating the success of Glasser’s teachings.

Quality World

The Quality World is central to William Glasser’s views. A person’s values and priorities are represented in the brain as mental images in a space called the Quality World. Pictures of significant people, places, ideas, and beliefs are filed away there. According to Glasser, the pictures in a person’s Quality World bring them joy and fulfill some fundamental needs. Such images are personal and are not checked against any societal norm. The Quality World is our ideal utopia.

Working of Glasser’s Choice Theory

Clients’ emotions and physiological reactions to stress may be improved through instruction in habit and cognitive modification. Education offers a good illustration. Disappointed pupils who are unable to master particular ideas or acquire particular skills may be taught to rethink what they place in their quality world. Counseling can also highlight the client’s history of problematic habits and encourage them to identify and address the underlying causes of those habits to prevent a recurrence. An essay on Schema Therapy states that neither Choice Theory nor its subset, Reality Therapy, focuses on the past. Clients are urged to live in the present and are encouraged to consider changes to their routine that could bring about more satisfying outcomes.

Conclusion of session Glasser’s Choice Theory

Glasser’s original version of Choice Theory posits that, at our most fundamental level, choices are made to satisfy a set of needs. It is hypothesized that people choose the option they believe would be best for them, which does not imply that bad judgments will not have negative results. It has been shown, meanwhile, that a Psychodynamic Approach may aid individuals in developing more effective approaches to managing issues. Choice Theory originates in traditional habits and has contributed greatly to many academic disciplines.

Game Theory

Game theory is the study of mathematical models of strategic interactions among rational agents [1]. It has applications in all fields of social science, as well as in logic, systems science and computer science. The concepts of game theory are used extensively in economics as well [2]. The traditional methods of game theory addressed two-person zero-sum games, in which each participant’s gains or losses are exactly balanced by the losses and gains of other participants. In the 21st century, advanced game theories apply to a wider range of behavioral relations; game theory is now an umbrella term for the science of logical decision making in humans, animals, and computers.

Modern game theory began with the idea of mixed-strategy equilibria in two-person zero-sum games and its proof by John von Neumann. Von Neumann’s original proof used the Brouwer fixed-point theorem on continuous mappings into compact convex sets, which became a standard method in game theory and mathematical economics. His paper was followed by the 1944 book Theory of Games and Economic Behavior, co-written with Oskar Morgenstern, which considered cooperative games of several players [3]. The second edition of this book provided an axiomatic theory of expected utility, which allowed mathematical statisticians and economists to treat decision-making under uncertainty. Game theory has thus evolved over time through the consistent efforts of mathematicians, economists and other academicians. It was developed extensively in the 1950s by many scholars. It was explicitly applied to evolution in the 1970s, although similar developments go back at least as far as the 1930s. Game theory has been widely recognized as an important tool in many fields. As of 2020, with the Nobel Memorial Prize in Economic Sciences going to game theorists Paul Milgrom and Robert B. Wilson, fifteen game theorists have won the economics Nobel Prize. John Maynard Smith was awarded the Crafoord Prize for his application of evolutionary game theory [4-12].

Different types of Games

Cooperative / Non-Cooperative

A game is cooperative if the players are able to form binding commitments that are externally enforced (e.g., through contract law). A game is non-cooperative if players cannot form alliances or if all agreements need to be self-enforcing (e.g., through credible threats) [13]. Cooperative games are often analyzed through the framework of cooperative game theory, which focuses on predicting which coalitions will form, the joint actions that groups take, and the resulting collective payoffs. It is opposed to traditional non-cooperative game theory, which focuses on predicting individual players’ actions and payoffs and analyzing Nash equilibria [14,15]. The focus on individual payoff can result in a phenomenon known as the Tragedy of the Commons, where resources are used to a collectively inefficient level. The lack of formal negotiation leads to the deterioration of public goods through over-use and under-provision that stem from private incentives [16]. Cooperative game theory provides a high-level approach as it describes only the structure, strategies, and payoffs of coalitions, whereas non-cooperative game theory also looks at how bargaining procedures will affect the distribution of payoffs within each coalition.

As non-cooperative game theory is more general, cooperative games can be analyzed through the approach of non-cooperative game theory (the converse does not hold) provided that sufficient assumptions are made to encompass all the possible strategies available to players due to the possibility of external enforcement of cooperation. While using a single theory may be desirable, in many instances insufficient information is available to accurately model the formal procedures available during the strategic bargaining process, or the resulting model would be too complex to offer a practical tool in the real world. In such cases, cooperative game theory provides a simplified approach that allows analysis of the game at large without having to make any assumption about bargaining powers.

Symmetric / Asymmetric

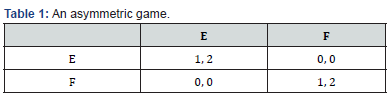

A symmetric game is a game where the payoffs for playing a particular strategy depend only on the other strategies employed, not on who is playing them. That is, if the identities of the players can be changed without changing the payoff to the strategies, then a game is symmetric. Many of the commonly studied 2×2 games are symmetric. The standard representations of chicken, the prisoner’s dilemma, and the stag hunt are all symmetric games. Some scholars would consider certain asymmetric games as examples of these games as well. However, the most common payoffs for each of these games are symmetric. The most commonly studied asymmetric games are games where there are not identical strategy sets for both players. For instance, the ultimatum game and similarly the dictator game have different strategies for each player. It is possible, however, for a game to have identical strategies for both players yet be asymmetric. For example, the game pictured in this section’s graphic is asymmetric despite having identical strategy sets for both players (Table 1).
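The definition above can be checked mechanically for two-player games. The following is a minimal sketch, assuming each game is given as two square payoff tables (one per player); the function name `is_symmetric` and all payoff numbers are illustrative assumptions and are not taken from Table 1.

```python
def is_symmetric(row_payoffs, col_payoffs):
    """Return True if swapping the players' identities leaves payoffs unchanged.

    row_payoffs[i][j] is the row player's payoff when row plays i and column
    plays j; col_payoffs[i][j] is the column player's payoff for that profile.
    """
    n = len(row_payoffs)
    tables = list(row_payoffs) + list(col_payoffs)
    # Both players must have the same number of strategies.
    if len(col_payoffs) != n or any(len(r) != n for r in tables):
        return False
    # Symmetry: the column player's table is the transpose of the row player's.
    return all(col_payoffs[i][j] == row_payoffs[j][i]
               for i in range(n) for j in range(n))


# Prisoner's dilemma with illustrative payoffs (0 = cooperate, 1 = defect).
pd_row = [[-1, -3], [0, -2]]
pd_col = [[-1, 0], [-3, -2]]

# Hypothetical game with identical strategy sets that is still asymmetric.
asym_row = [[1, 0], [0, 3]]
asym_col = [[2, 0], [0, 2]]

print(is_symmetric(pd_row, pd_col))      # symmetric
print(is_symmetric(asym_row, asym_col))  # asymmetric
```

The second game illustrates the closing point of the paragraph: both players choose from the same two strategies, yet the payoff attached to a strategy depends on which player uses it.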

Zero-Sum / Non-Zero-Sum

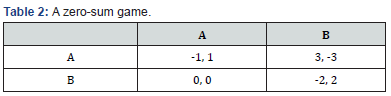

Zero-sum games (more generally, constant-sum games) are games in which choices by players can neither increase nor decrease the available resources. In zero-sum games, the total benefit to all players in the game, for every combination of strategies, always adds to zero (more informally, a player benefits only at the equal expense of others) [17]. Poker exemplifies a zero-sum game (ignoring the possibility of the house’s cut), because one wins exactly the amount one’s opponents lose. Other zero-sum games include matching pennies and most classical board games, including Go and chess (Table 2). Many games studied by game theorists (including the famed prisoner’s dilemma) are non-zero-sum games, because the outcome has net results greater or less than zero. Informally, in non-zero-sum games, a gain by one player does not necessarily correspond with a loss by another. Constant-sum games correspond to activities like theft and gambling, but not to the fundamental economic situation in which there are potential gains from trade. It is possible to transform any constant-sum game into a (possibly asymmetric) zero-sum game by adding a dummy player (often called “the board”) whose losses compensate the players’ net winnings.
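As a rough illustration of these definitions, the sketch below represents a game as a mapping from strategy profiles to payoff tuples, tests whether the payoffs always add to zero (or to a constant), and applies the dummy-player transformation. The function names and all payoff numbers are assumptions made for this example, not values from Table 2.

```python
def payoff_sums(game):
    """Set of total payoffs over all strategy profiles of the game."""
    return {sum(payoffs) for payoffs in game.values()}


def is_zero_sum(game):
    return payoff_sums(game) == {0}


def is_constant_sum(game):
    return len(payoff_sums(game)) == 1


def add_dummy_player(game):
    """Append a dummy player ("the board") whose payoff cancels the others."""
    return {profile: payoffs + (-sum(payoffs),)
            for profile, payoffs in game.items()}


matching_pennies = {
    ("H", "H"): (1, -1), ("H", "T"): (-1, 1),
    ("T", "H"): (-1, 1), ("T", "T"): (1, -1),
}
prisoners_dilemma = {  # illustrative payoffs
    ("C", "C"): (-1, -1), ("C", "D"): (-3, 0),
    ("D", "C"): (0, -3), ("D", "D"): (-2, -2),
}
split_the_pot = {  # hypothetical constant-sum game: payoffs always total 10
    ("a", "x"): (7, 3), ("a", "y"): (4, 6),
    ("b", "x"): (5, 5), ("b", "y"): (9, 1),
}

print(is_zero_sum(matching_pennies))                  # True
print(is_constant_sum(prisoners_dilemma))             # False
print(is_zero_sum(add_dummy_player(split_the_pot)))   # True
```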

Simultaneous / Sequential

Simultaneous games are games where both players move simultaneously, or instead the later players are unaware of the earlier players’ actions (making them effectively simultaneous). Sequential games (or dynamic games) are games where players do not make decisions simultaneously, and the player’s earlier actions affect the outcome and decisions of other players. [18] This need not be perfect information about every action of earlier players; it might be very little knowledge. For instance, a player may know that an earlier player did not perform one particular action, while they do not know which of the other available actions the first player actually performed. The difference between simultaneous and sequential games is captured in the different representations discussed above. Often, normal form is used to represent simultaneous games, while extensive form is used to represent sequential ones. The transformation of extensive to normal form is one way, meaning that multiple extensive form games correspond to the same normal form. Consequently, notions of equilibrium for simultaneous games are insufficient for reasoning about sequential games.

Perfect Information and Imperfect Information

A game of imperfect information. The dotted line represents ignorance on the part of player 2, formally called an information set.

An important subset of sequential games consists of games of perfect information. A game with perfect information means that all players, at every move in the game, know the previous history of the game and the moves previously made by all other players. In reality, this can be applied to firms and consumers having information about the price and quality of all the available goods in a market [19]. An imperfect information game is played when the players do not know all moves already made by the opponent, as in a simultaneous move game [20]. Most games studied in game theory are imperfect-information games. Examples of perfect-information games include tic-tac-toe, checkers, chess, and go [21-23]. Many card games are games of imperfect information, such as poker and bridge [24]. Perfect information is often confused with complete information, which is a similar concept pertaining to the common knowledge of each player’s sequence, strategies, and payoffs throughout gameplay [25]. Complete information requires that every player know the strategies and payoffs available to the other players but not necessarily the actions taken, whereas perfect information is knowledge of all aspects of the game and players [26]. Games of incomplete information can be reduced, however, to games of imperfect information by introducing “moves by nature” [27].

Bayesian Game

One of the assumptions of the Nash equilibrium is that every player has correct beliefs about the actions of the other players. However, there are many situations in game theory where participants do not fully understand the characteristics of their opponents. Negotiators may be unaware of their opponent’s valuation of the object of negotiation, companies may be unaware of their opponent’s cost functions, combatants may be unaware of their opponent’s strengths, and jurors may be unaware of their colleagues’ interpretation of the evidence at trial. In some cases, participants may know the character of their opponent well, but may not know how well their opponent knows his or her own character. A Bayesian game is a strategic game with incomplete information. As in a strategic game, the decision makers are players, and every player has a set of actions. A core part of the imperfect information specification is the set of states. Every state completely describes a collection of characteristics relevant to the players, such as their preferences and details about them. There must be a state for every set of features that some player believes may exist.

Example of a Bayesian game

For example, consider a situation in which Player 1 is unsure whether Player 2 would rather date her or get away from her, while Player 2 understands Player 1’s preferences as before. To be specific, suppose that Player 1 believes that Player 2 wants to date her with probability 1/2 and wants to get away from her with probability 1/2 (this assessment probably comes from Player 1’s experience: in such cases she faces players who want to date her half of the time and players who want to avoid her half of the time). Because probabilities are involved, the analysis of this situation requires knowing the players’ preferences over lotteries, even if one is interested only in pure-strategy equilibria.
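The role of the 1/2 beliefs can be made concrete with a small expected-payoff calculation. This is only a sketch: the text does not specify actions or payoffs, so the two venues "A" and "B", Player 1's payoff table `u1`, and Player 2's type-contingent strategy are all hypothetical choices made for illustration.

```python
from fractions import Fraction

# Player 1's belief about Player 2's type, as stated in the example.
belief = {"wants_to_meet": Fraction(1, 2), "wants_to_avoid": Fraction(1, 2)}

# Hypothetical payoffs for Player 1, indexed by (Player 1 venue, Player 2 venue).
# Player 1 only benefits when both end up at the same venue.
u1 = {("A", "A"): 2, ("A", "B"): 0, ("B", "A"): 0, ("B", "B"): 1}


def expected_payoff(action_1, strategy_2):
    """Player 1's expected payoff against a type-contingent strategy of Player 2."""
    return sum(prob * u1[(action_1, strategy_2[ptype])]
               for ptype, prob in belief.items())


# Hypothetical strategy: the "meet" type goes to A, the "avoid" type goes to B.
strategy_2 = {"wants_to_meet": "A", "wants_to_avoid": "B"}

for action in ("A", "B"):
    print(action, expected_payoff(action, strategy_2))
```

Because Player 1 compares lotteries over outcomes rather than certain outcomes, the numerical payoffs matter and not just their ordering, which is the point made at the end of the example.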

Combinatorial Games

Games in which the difficulty of finding an optimal strategy stems from the multiplicity of possible moves are called combinatorial games. Examples include chess and go. Games that involve imperfect information may also have a strong combinatorial character, for instance backgammon. There is no unified theory addressing combinatorial elements in games. There are, however, mathematical tools that can solve some particular problems and answer some general questions. Games of perfect information have been studied in combinatorial game theory, which has developed novel representations, e.g., surreal numbers, as well as combinatorial and algebraic (and sometimes non-constructive) proof methods to solve games of certain types, including “loopy” games that may result in infinitely long sequences of moves. These methods address games with higher combinatorial complexity than those usually considered in traditional (or “economic”) game theory.[31,32] A typical game that has been solved this way is Hex. A related field of study, drawing from computational complexity theory, is game complexity, which is concerned with estimating the computational difficulty of finding optimal strategies. Research in artificial intelligence has addressed both perfect and imperfect information games that have very complex combinatorial structures (like chess, go, or backgammon) for which no provable optimal strategies have been found. The practical solutions involve computational heuristics, like alpha-beta pruning or use of artificial neural networks trained by reinforcement learning, which make games more tractable in computing practice.
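Alpha-beta pruning, mentioned above as a computational heuristic, can be sketched on a toy game tree. The representation below (nested lists for decision nodes, numbers for terminal payoffs to the maximizing player) and the example tree are assumptions chosen for brevity; a plain minimax search is included only to show that pruning does not change the computed value.

```python
import math


def minimax(node, maximizing=True):
    """Exhaustive minimax value of a tree of nested lists with numeric leaves."""
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def alphabeta(node, maximizing=True, alpha=-math.inf, beta=math.inf):
    """Minimax value with alpha-beta pruning; skips branches that cannot matter."""
    if not isinstance(node, list):
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:  # the minimizer already has a better option elsewhere
                break
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:  # the maximizer already has a better option elsewhere
            break
    return value


# Hypothetical two-ply tree: the maximizer moves first, then the minimizer.
tree = [[3, 5], [6, 9], [1, 2]]
print(minimax(tree), alphabeta(tree))
```

In this tree the search of the third branch stops after its first leaf, since a value of 1 is already worse for the maximizer than the 6 guaranteed by the second branch.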

Infinitely Long Games

Games, as studied by economists and real-world game players, are generally finished in finitely many moves. Pure mathematicians are not so constrained and set theorists in particular study games that last for infinitely many moves, with the winner (or other payoff) not known until after all those moves are completed. The focus of attention is usually not so much on the best way to play such a game, but whether one player has a winning strategy. (It can be proven, using the axiom of choice, that there are games - even with perfect information and where the only outcomes are “win” or “lose” - for which neither player has a winning strategy.) The existence of such strategies, for cleverly designed games, has important consequences in descriptive set theory.

Discrete and Continuous Games

Much of game theory is concerned with finite, discrete games that have a finite number of players, moves, events, outcomes, etc. Many concepts can be extended, however. Continuous games allow players to choose a strategy from a continuous strategy set. For instance, Cournot competition is typically modeled with players’ strategies being any non-negative quantities, including fractional quantities. Continuous games allow the possibility for players to communicate with each other under certain rules, primarily the enforcement of a communication protocol between the players. By communicating, players have been noted to be willing to provide a larger amount of goods in a public good game than they ordinarily would in a discrete game, and as a result, the players are able to manage resources more efficiently than they would in discrete games, as they share resources, ideas and strategies with one another. This incentivizes, and causes, continuous games to have a higher median cooperation rate. [35].

Differential Games

Differential games such as the continuous pursuit and evasion game are continuous games where the evolution of the players’ state variables is governed by differential equations. The problem of finding an optimal strategy in a differential game is closely related to the optimal control theory. In particular, there are two types of strategies: the open-loop strategies are found using the Pontryagin maximum principle while the closed-loop strategies are found using Bellman’s Dynamic Programming method. A particular case of differential games are the games with a random time horizon.[36] In such games, the terminal time is a random variable with a given probability distribution function. Therefore, the players maximize the mathematical expectation of the cost function. It was shown that the modified optimization problem can be reformulated as a discounted differential game over an infinite time interval.

Evolutionary Game Theory

Evolutionary game theory studies players who adjust their strategies over time according to rules that are not necessarily rational or farsighted.[37] In general, the evolution of strategies over time according to such rules is modeled as a Markov chain with a state variable such as the current strategy profile or how the game has been played in the recent past. Such rules may feature imitation, optimization, or survival of the fittest. In biology, such models can represent evolution, in which offspring adopt their parents’ strategies and parents who play more successful strategies (i.e., corresponding to higher payoffs) have a greater number of offspring. In the social sciences, such models typically represent strategic adjustment by players who play a game many times within their lifetime and, consciously or unconsciously, occasionally adjust their strategies. [38].

Stochastic Outcomes (And Relation to other Fields)

Individual decision problems with stochastic outcomes are sometimes considered “one-player games”. They may be modeled using similar tools within the related disciplines of decision theory, operations research, and areas of artificial intelligence, particularly AI planning (with uncertainty) and multi-agent system. Although these fields may have different motivators, the mathematics involved are substantially the same, e.g., using Markov decision processes (MDP).[39] Stochastic outcomes can also be modeled in terms of game theory by adding a randomly acting player who makes “chance moves” (“moves by nature”). [40] This player is not typically considered a third player in what is otherwise a two-player game, but merely serves to provide a roll of the dice where required by the game.

For some problems, different approaches to modeling stochastic outcomes may lead to different solutions. For example, the difference in approach between MDPs and the minimax solution is that the latter considers the worst case over a set of adversarial moves, rather than reasoning in expectation about these moves given a fixed probability distribution. The minimax approach may be advantageous where stochastic models of uncertainty are not available, but it may also overestimate extremely unlikely (but costly) events, dramatically swaying the strategy in such scenarios if it is assumed that an adversary can force such an event to happen. General models that include all elements of stochastic outcomes, adversaries, and partial or noisy observability (of moves by other players) have also been studied. The “gold standard” is considered to be the partially observable stochastic game (POSG), but few realistic problems are computationally feasible in POSG representation.
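The contrast between reasoning in expectation and reasoning about the worst case can be seen in a one-step decision problem. The sketch below is illustrative only: the two actions, their payoffs under three possible events, and the event probabilities are hypothetical numbers chosen so that the two criteria disagree.

```python
def expected_value_choice(payoffs, probs):
    """Action with the highest expected payoff under fixed event probabilities."""
    def expected(action):
        return sum(p * x for p, x in zip(probs, payoffs[action]))
    return max(payoffs, key=expected)


def minimax_choice(payoffs):
    """Action whose worst-case payoff is highest (adversary picks the event)."""
    return max(payoffs, key=lambda action: min(payoffs[action]))


# Hypothetical payoffs of each action under three events; the third event is
# rare but very costly for the "risky" action.
payoffs = {"risky": [10, 10, -100], "safe": [2, 2, 1]}
probs = [0.495, 0.495, 0.01]

print(expected_value_choice(payoffs, probs))  # risky
print(minimax_choice(payoffs))                # safe
```

Under the stated probabilities the risky action is better on average, while the worst-case criterion is driven entirely by the 1% event, which mirrors the overestimation concern described above.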

Metagames

These are games the play of which is the development of the rules for another game, the target or subject game. Metagames seek to maximize the utility value of the rule set developed. The theory of metagames is related to mechanism design theory. The term metagame analysis is also used to refer to a practical approach developed by Nigel Howard, whereby a situation is framed as a strategic game in which stakeholders try to realize their objectives by means of the options available to them. Subsequent developments have led to the formulation of confrontation analysis.

Pooling Games

These are games prevailing over all forms of society. Pooling games are repeated plays in which the payoff table generally changes along an experienced path, and their equilibrium strategies usually take the form of evolutionary social and economic conventions. Pooling game theory emerged to formally recognize the interaction between the optimal choice in one play and the emergence of the subsequent payoff-table update path, to identify the existence and robustness of invariance, and to predict variance over time. The theory is based on a topological transformation classification of payoff-table updates over time to predict variance and invariance, and it also falls within the jurisdiction of the computational law of reachable optimality for ordered systems.

Mean Field Game Theory

Mean field game theory is the study of strategic decision making in very large populations of small interacting agents. This class of problems was considered in the economics literature by Boyan Jovanovic and Robert W. Rosenthal, in the engineering literature by Peter E. Caines, and by mathematicians Pierre-Louis Lions and Jean-Michel Lasry.

Representation of Games

The games studied in game theory are well-defined mathematical objects. To be fully defined, a game must specify the following elements: the players of the game, the information and actions available to each player at each decision point, and the payoffs for each outcome. (Eric Rasmusen refers to these four “essential elements” by the acronym “PAPI”.) A game theorist typically uses these elements, along with a solution concept of their choosing, to deduce a set of equilibrium strategies for each player such that, when these strategies are employed, no player can profit by unilaterally deviating from their strategy. These equilibrium strategies determine an equilibrium to the game, that is, a stable state in which either one outcome occurs or a set of outcomes occurs with known probability.
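The “no profitable unilateral deviation” condition can be checked mechanically for small two-player games. The following is a minimal sketch, assuming pure strategies only and a hypothetical coordination game whose payoffs are made up for illustration; the function name `pure_nash_equilibria` is likewise an assumption, not an established API.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """Return all pure-strategy profiles where no player gains by deviating alone.

    payoffs maps (row_strategy, col_strategy) -> (row_payoff, col_payoff).
    """
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        row_pay, col_pay = payoffs[(r, c)]
        # Row player cannot do better by switching rows while the column stays fixed.
        row_ok = all(payoffs[(alt, c)][0] <= row_pay for alt in rows)
        # Column player cannot do better by switching columns while the row stays fixed.
        col_ok = all(payoffs[(r, alt)][1] <= col_pay for alt in cols)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


# Hypothetical coordination game (illustrative payoffs only).
COORDINATION = {
    ("A", "A"): (2, 1),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
    ("B", "B"): (1, 2),
}

print(pure_nash_equilibria(COORDINATION))
```

This brute-force check only covers pure strategies; equilibria in which outcomes occur with known probability (mixed strategies) need a different method.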

In games, a player may have a ‘Dominant Strategy’: a strategy that gives them the maximum payoff, so that they are incentivised to choose it and stick to it even when the other players change their strategies or choose a different option. However, depending on the possible payoffs, one of the players may not possess a ‘Dominant Strategy’, while the other player might. One player lacking a dominant strategy does not mean that the other player lacks one too, which can put the first player at an immediate disadvantage. There is also the chance of both players possessing Dominant Strategies, in which case their chosen strategies and the combined payoffs form an equilibrium. When this occurs, it creates a Dominant Strategy Equilibrium. This can produce a Social Dilemma, where a game possesses an equilibrium created by two or more players who all have dominant strategies, and the game’s solution differs from what the cooperative solution to the game would have been.
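A dominant strategy can be detected by comparing one strategy against every alternative for each possible choice of the opponent. The sketch below assumes a two-player game and uses the textbook prisoner's dilemma with illustrative payoffs; the helper name `dominant_strategy` is hypothetical.

```python
def dominant_strategy(payoffs, player):
    """Return a (weakly) dominant strategy for the player, or None if there is none.

    payoffs maps (row_strategy, col_strategy) -> (row_payoff, col_payoff);
    player is 0 for the row player and 1 for the column player.
    """
    own = sorted({profile[player] for profile in payoffs})
    other = sorted({profile[1 - player] for profile in payoffs})

    def pay(mine, theirs):
        profile = (mine, theirs) if player == 0 else (theirs, mine)
        return payoffs[profile][player]

    for candidate in own:
        # The candidate must be at least as good as every alternative,
        # whatever the other player does.
        if all(pay(candidate, o) >= pay(alt, o) for o in other for alt in own):
            return candidate
    return None


# Prisoner's dilemma with illustrative payoffs (assumed numbers).
PRISONERS_DILEMMA = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}

row_choice = dominant_strategy(PRISONERS_DILEMMA, 0)
col_choice = dominant_strategy(PRISONERS_DILEMMA, 1)
print(row_choice, col_choice, PRISONERS_DILEMMA[(row_choice, col_choice)])
```

In this assumed game both players have a dominant strategy, yet the resulting equilibrium payoffs are lower for both than under mutual cooperation, which is the social dilemma described above.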

There is also the chance of a player having more than one dominant strategy. This occurs when reacting to multiple strategies from a second player, and the first player’s separate responses having different strategies to each other. This means that there is no chance of a Nash Equilibrium occurring within the game. Most cooperative games are presented in the characteristic function form, while the extensive and the normal forms are used to define noncooperative games.

Extensive Form

The extensive form can be used to formalize games with a time sequencing of moves. Extensive form games can be visualized using game trees (as pictured here). Here each vertex (or node) represents a point of choice for a player. The player is specified by a number listed by the vertex. Each line out of a vertex represents a possible action for that player. The payoffs are specified at the bottom of the tree. The extensive form can be viewed as a multi-player generalization of a decision tree [50-60]. To solve any extensive form game, backward induction must be used. It involves working backward up the game tree to determine what a rational player would do at the last vertex of the tree, what the player with the previous move would do given that the player with the last move is rational, and so on until the first vertex of the tree is reached. The game pictured consists of two players.

The way this particular game is structured (i.e., with sequential decision making and perfect information), Player 1 “moves” first by choosing either F or U (fair or unfair). Next in the sequence, Player 2, who has now observed Player 1’s move, can choose to play either A or R. Once Player 2 has made their choice, the game is considered finished and each player gets their respective payoff, represented in the image as two numbers, where the first number represents Player 1’s payoff, and the second number represents Player 2’s payoff. Suppose that Player 1 chooses U and then Player 2 chooses A: Player 1 then gets a payoff of “eight” (which in real-world terms can be interpreted in many ways, the simplest of which is in terms of money, but it could also mean things such as eight days of vacation, eight countries conquered, or even eight more opportunities to play the same game against other players) and Player 2 gets a payoff of “two”. The extensive form can also capture simultaneous-move games and games with imperfect information. To represent this, either a dotted line connects different vertices to show that they are part of the same information set (i.e., the players do not know at which point they are), or a closed line is drawn around them [60-70].

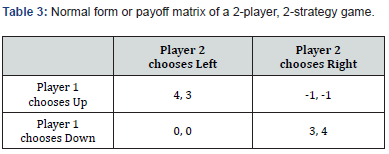
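Backward induction on a two-stage game of this kind can be sketched as a short recursive function. Only the payoff (8, 2) for the path U then A is stated in the text; the other three leaf payoffs below are assumptions chosen for illustration, as is the dictionary layout used to encode the tree.

```python
def backward_induction(node):
    """Solve a finite perfect-information game tree from the leaves upward.

    A leaf is a tuple of payoffs, one per player. An internal node is a dict
    {"player": index, "moves": {action: child_node}}.
    Returns (payoffs, list of actions along the solved path).
    """
    if isinstance(node, tuple):
        return node, []
    mover = node["player"]
    best_action, best_payoffs, best_path = None, None, None
    for action, child in node["moves"].items():
        payoffs, path = backward_induction(child)
        # The mover keeps the action that maximizes their own payoff;
        # ties go to the action listed first.
        if best_payoffs is None or payoffs[mover] > best_payoffs[mover]:
            best_action, best_payoffs, best_path = action, payoffs, path
    return best_payoffs, [best_action] + best_path


GAME = {
    "player": 0,  # Player 1 moves first
    "moves": {
        "F": {"player": 1, "moves": {"A": (5, 5), "R": (0, 0)}},  # assumed payoffs
        "U": {"player": 1, "moves": {"A": (8, 2), "R": (0, 0)}},  # (8, 2) is from the text
    },
}

print(backward_induction(GAME))
```

Under these assumed payoffs, Player 2 accepts in either subtree, so Player 1 anticipates this and chooses U. Games with imperfect information (dotted-line information sets) are outside the scope of this sketch.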

Normal Form

The normal-form (or strategic-form) game is usually represented by a matrix which shows the players, strategies, and payoffs (see the accompanying example). More generally, it can be represented by any function that associates a payoff for each player with every possible combination of actions. In the accompanying example there are two players; one chooses the row and the other chooses the column. Each player has two strategies, which are specified by the number of rows and the number of columns. The payoffs are provided in the interior. The first number is the payoff received by the row player (Player 1 in our example); the second is the payoff for the column player (Player 2 in our example). Suppose that Player 1 plays Up and that Player 2 plays Left. Then Player 1 gets a payoff of 4, and Player 2 gets 3 [70-90] (Table 3).

When a game is presented in normal form, it is presumed that each player acts simultaneously or, at least, without knowing the actions of the other. If players have some information about the choices of other players, the game is usually presented in extensive form. Every extensive-form game has an equivalent normal-form game; however, the transformation to normal form may result in an exponential blowup in the size of the representation, making it computationally impractical.
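The exponential blowup comes from the fact that a pure strategy in the normal form must name one action for every information set the player could reach. The sketch below assumes a second mover who observes an F or U move and answers with A or R, as in the extensive-form example; the labels `after_F` and `after_U` and the helper names are hypothetical.

```python
from itertools import product


def pure_strategies(information_sets):
    """Enumerate a player's pure strategies: one action per information set.

    information_sets maps an information-set label to its available actions.
    """
    labels = sorted(information_sets)
    return [
        dict(zip(labels, choice))
        for choice in product(*(information_sets[label] for label in labels))
    ]


# Player 2 observes Player 1's move, so Player 2 has two information sets
# with two actions each (assumed labels).
player2_sets = {"after_F": ["A", "R"], "after_U": ["A", "R"]}
strategies = pure_strategies(player2_sets)
print(len(strategies))  # 2 * 2 contingent plans


def strategy_count(num_information_sets, actions_per_set=2):
    """Number of pure strategies when every information set has the same size."""
    return actions_per_set ** num_information_sets


print(strategy_count(20))
```

Even this tiny game turns Player 2's two actions into four contingent plans, and each additional binary information set doubles the number of columns in the equivalent matrix.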

Chemical game theory is an alternative model of game theory that represents and solves problems in strategic interactions or contested human decision making. Differences with traditional game theory concepts include the use of metaphorical molecules called “knowlecules”, which represent choices and decisions among players in the game. Using knowlecules, entropic choices and the effects of preexisting biases are taken into consideration.

A game in chemical game theory is then represented in the form of a process flow diagram consisting of unit operations. The unit operations represent the decision-making processes of the players and have similarities to the garbage can model of political science. A game of N players, N being any integer greater than 1, is represented by N reactors in parallel. The concentrations that enter a reactor correspond to the bias that a player enters the game with. The reactions occurring in the reactors are comparable to the decision-making process of each player. The concentrations of the final products represent the likelihood of each outcome given the preexisting biases and pains for the situation [91-100].

Now that game theory has been explained in sufficient detail, I want to state plainly that it is indeed possible to produce chemical compounds using artificial intelligence together with good strategies such as game theory and selection theory, and to do so more easily and quickly. The main point to note is that, with this method, reaching the final answer, especially in the production of pharmaceutical products, is much simpler, more accurate, and faster.

To Know more about Recent Advances in Petrochemical Science

Click here: https://juniperpublishers.com/rapsci/index.php

To Know more about our Juniper Publishers

Click here: https://juniperpublishers.com/index.php
